The Serverless Revolution: Why and How The Movement Will Allow Teams to Deploy With More Velocity and Confidence

Drive DevOps success with better, more informed decisions based on real-time facts, not opinions.

The serverless movement has received another jolt of momentum with Google’s recent release of KNative. Leveraging some of the hottest technologies, including Kubernetes and Istio, KNative raises the bar for serverless by supporting a wide set of platforms, including Pivotal Cloud Foundry and Red Hat OpenShift. With such a set of heavy hitters, if you are not onboard with serverless, you’ll be left behind, right?

The Rise of Serverless

When building a new application, adding features, or preparing for a spike in usage, capacity planning is an important part of the application lifecycle. Part science and part art, determining infrastructure needs while negotiating with stakeholders is not a trivial activity. From a development standpoint, software engineers strive to make their solutions as efficient as possible, relying on code reviews, code-coverage tools, and APM solutions that profile their applications so they consume the fewest resources.

Building the algorithms/functions is usually just half of the equation for a software engineer. The other half is navigating the application infrastructure. In the Java world, tuning the application server/Java runtime is not uncommon. Having an understanding of what your application is doing is just as important as where/what is running the application.

That other half, the where and what of running your application, takes away from the core innovation work that software engineers strive for. Imagine a paradigm where you can just focus on the function or business logic without having to worry too much about where your application is running, or even about scaling the function. If that appeals to you, you are not alone, and it is why the serverless boom is underway.

The Mighty Lambda

For those beginning their serverless journey, a popular starting point is AWS Lambda. Amazon introduced its Lambda service in November 2014. The premise is that a function (in this case, a Lambda function) is triggered by an event; this is an event-driven architecture. In Amazon’s case, the underlying compute is managed by AWS.

Your First Lambda

Amazon has good documentation on creating your first Lambda function. Another source I used to get up to speed is FreeCodeCamp, which has a detailed step-by-step on making a NodeJS Lambda to determine if a string is a palindrome.
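
To make the palindrome example concrete, here is a minimal sketch of such a function. The tutorial mentioned above uses NodeJS; this version is written in Python purely for illustration. The `text` event field, the response shape, and the choice to ignore case and punctuation are assumptions of this sketch, not details taken from the tutorial.

```python
import json


def is_palindrome(text: str) -> bool:
    """Return True when text reads the same forwards and backwards.

    Ignoring case and non-alphanumeric characters is an assumption
    of this sketch.
    """
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    return cleaned == cleaned[::-1]


def lambda_handler(event, context):
    # "text" is a hypothetical event field chosen for this example.
    text = event.get("text", "")
    return {
        "statusCode": 200,
        "body": json.dumps({"text": text, "isPalindrome": is_palindrome(text)}),
    }
```

Because the handler is a plain function, it can be exercised locally by calling `lambda_handler({"text": "level"}, None)` before anything is deployed.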

The ecosystem pieces to take into account for a Lambda can be broken down as:

- Trigger/invoke the Lambda: An event needs to trigger a Lambda. AWS provides an SDK in several languages to invoke a Lambda. Infrastructure can also be placed in front of the Lambda, for example a message broker to queue up events to be processed.

- Scale/react to Lambda demand: As demand for the function increases, the Lambda has the ability to scale elastically. Understanding how a Lambda runs concurrently, as well as the pricing implications, is an important consideration.

- Output (what your Lambda is all about): Like any event-driven architecture, a response or another event is expected. Output can be written to a downstream system such as ElastiCache, or returned as a simple response. Logging becomes even more important with Lambdas, because the application infrastructure logging that engineers are used to on-prem is not there. In the case of a Java Lambda, from an engineering perspective, configuring Log4J is not terribly different from the non-serverless world.
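
The trigger, output, and logging pieces above can be sketched together in one small handler. This is an illustration under stated assumptions rather than a reference implementation: it assumes a queue-style trigger that delivers a batch under a `Records` key with a string `body` per record (the shape SQS uses), and `process` is a hypothetical stand-in for real business logic. It uses Python's standard `logging` module in the same role Log4J plays for a Java Lambda.

```python
import json
import logging

# In Lambda, messages from the standard logging module end up in CloudWatch Logs.
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


def process(body: str) -> dict:
    """Hypothetical business logic: report the length of the message body."""
    return {"length": len(body)}


def lambda_handler(event, context):
    # Trigger: queue-style events arrive as a batch under "Records".
    records = event.get("Records", [])
    logger.info("received %d record(s)", len(records))

    results = []
    for record in records:
        result = process(record["body"])
        # Log each step; there is no application server log to fall back on.
        logger.info("processed message %s: %s",
                    record.get("messageId"), json.dumps(result))
        results.append(result)

    # Output: a simple response. A real function might instead write
    # to a downstream system.
    return {"processed": len(results), "results": results}
```

Scaling is deliberately absent from the code: how many copies of this handler run concurrently is decided by the platform, which is why the concurrency and pricing behavior is worth understanding separately.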

So Why Aren’t Our Servers at the Bottom of a Lake?

Because serverless, like every other technology architecture, has its pros and cons. Using a cloud vendor’s function service can lead to rough edges around portability and observability. The old adage, “The cloud is just someone else’s computer,” is sage advice, especially when it comes to serverless. After all, your function still has to run somewhere.

Portability

After the success of Amazon’s Lambda, other major cloud vendors, including Google Cloud and Azure, have rolled out their own renditions of function services. One major drawback of investing too heavily in one cloud service SDK is the increased difficulty of running the service somewhere else (i.e., lock-in). One might argue that “Java is Java” and “I did not import anything from com.amazonaws, so pound sand, author.” Salient and good points, but don’t forget the surrounding ecosystem needed to trigger, scale, and monitor the Lambda. To achieve greater portability, imagine having three sets of deployment scripts, one per major public cloud vendor, with tests/mocks for each, every time there is a change.
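
One common way to limit lock-in at the code level is to keep the business logic free of vendor imports and wrap it in thin, per-vendor adapters. The sketch below illustrates the idea with two adapters. The `text` field and the response shapes are assumptions made for this example; the Google adapter assumes an HTTP-triggered function, which receives a Flask-style request object. Note that this only addresses the function code; the deployment scripts, triggers, and monitoring around it still differ per vendor.

```python
import json


def is_palindrome(text: str) -> bool:
    """Vendor-neutral business logic: no cloud SDK imports."""
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    return cleaned == cleaned[::-1]


def aws_handler(event, context):
    """Adapter for AWS Lambda's (event, context) handler signature."""
    text = event.get("text", "")  # hypothetical event field
    return {
        "statusCode": 200,
        "body": json.dumps({"isPalindrome": is_palindrome(text)}),
    }


def gcp_handler(request):
    """Adapter for an HTTP-triggered Google Cloud Function.

    The platform passes a Flask request object; get_json(silent=True)
    returns None instead of raising when the body is not valid JSON.
    """
    payload = request.get_json(silent=True) or {}
    text = payload.get("text", "")  # hypothetical request field
    return json.dumps({"isPalindrome": is_palindrome(text)})
```

With this split, tests for `is_palindrome` run once, and only the small adapters need vendor-specific tests or mocks.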

Observability

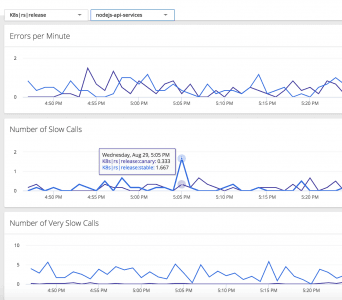

One of the biggest objections to serverless focuses on observability. What is observability? Given an output, how well did the system perform? Going back to FreeCodeCamp’s palindrome example, if a string is a palindrome, how efficient was the system in determining that the string was a palindrome? As systems become more distributed, we start to run into challenges with observability. Serverless.com has a pretty good roundup of observability tools for the public cloud (or private cloud, in some cases).
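
When logs are the main signal available, a small first step is to emit timing data in a structured form that tooling can parse. The following is a minimal sketch of that idea, not a substitute for a monitoring product: the `timed` decorator, the `metrics` logger name, and the JSON field names are all hypothetical choices made for this example.

```python
import functools
import json
import logging
import time

logger = logging.getLogger("metrics")  # hypothetical logger name
logger.setLevel(logging.INFO)


def timed(func):
    """Log one JSON line with the wall-clock duration of each call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Runs whether the call returned or raised.
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info(json.dumps({
                "function": func.__name__,
                "duration_ms": round(elapsed_ms, 3),
            }))
    return wrapper


@timed
def is_palindrome(text: str) -> bool:
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    return cleaned == cleaned[::-1]
```

Each call to `is_palindrome` now leaves a machine-readable record of how long it took, which answers the "how efficient was the system" question for a single function but says nothing yet about the distributed path around it.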

The ability to instrument/profile your application infrastructure with the same level of control provided by an on-prem deployment might not be possible on a cloud vendor with multiple tenants. Taking the observability point a step further, there can be inherent challenges with observability; for instance, trying to paint a picture with just logs. We’ve written extensively on AppDynamics’ latest thinking on serverless and Lambda monitoring as well.

Not Only the Public Cloud

Functions are not limited to cloud providers. The enterprise can host its own function-as-a-service infrastructure to give its internal clients the ability to leverage functions. OpenWhisk and OpenFaaS are popular alternatives to cloud vendor implementations. OpenWhisk can be deployed via Kubernetes and Mesos, too.

KNative, the Holy Grail

There certainly has been a lot of buzz around KNative. (Read Google’s initial blog to see how they believe KNative will change the face of serverless computing.) Comparing KNative to other serverless implementations, Google is certainly exposing more of how the sausage is made. By using Kubernetes as the orchestrator and Istio service mesh as the underpinnings of KNative, Google is addressing key concerns involving portability and observability in serverless environments.

How AppDynamics Intersects with KNative

AppDynamics already provides strong integration with Kubernetes-based workloads. And with PaaS providers starting to embrace KNative, AppDynamics has existing integrations with PaaS vendors, too.

All of the potential investment in KNative will allow development teams to deploy with increased velocity and confidence. The ability to have a clear understanding of business/application performance will be crucial for the continued growth of serverless.

Serverless Revolution Continues

With KNative, the needle of serverless moves further towards enterprise legitimacy. As the project starts to garner more attention, the enterprise will take a closer look at serverless and how to address its biggest hurdles around portability and observability.

I’m a big fan of the “Awesome” lists on GitHub. Check out the Awesome Serverless list, where you can see the advances in the serverless revolution.

As technology marches toward application nirvana, where organizations don’t have to worry about the complexities of scaling applications, serverless will be an important part of the equation. Look to AppDynamics to help you navigate this exciting new world!
