Building a Remote Caching System

Last month, our engineering team shipped a large update to how Docker images are cached and stored when using Jet, our Docker platform. In this post, we’ll discuss the motivation for the update, the design and implementation of the feature, and some of the tricky engineering problems we faced.

What Is Image Caching Anyway?

Building a service with Docker is generally pretty fast because Docker uses a layered file system. Each instruction in your Dockerfile is executed and stored as a separate layer. When you rebuild the image, as long as a layer’s contents are unchanged, Docker will just use the cached layer instead of rebuilding it.

What’s even better is that layers can be shared across multiple images. Having a highly optimized base image, and optimized Dockerfiles, can give you incredible performance benefits.

During Codeship builds, the ability to use cached images is paramount. In most cases, Docker images are based on layers that change very infrequently — the FROM image, package installs, and maybe even a COPY of application directories that are more or less fixed, like config. These are also generally the layers that take the longest to build, and there’s no sense in doing the same work twice.

Codeship faces a unique situation in that our build machines are ephemeral, meaning you get a new one each time you run a build. There’s no local image cache to fall back on because the images have never been built on that machine before, and during a build run, they only need to be built once.

To solve this, we have to rely on a remote cache source to store image information. In addition to being remote, this cache store also needs to be scoped to each customer, so there’s no chance of Customer A being able to access the build cache of Customer B. It also needs to be fast.

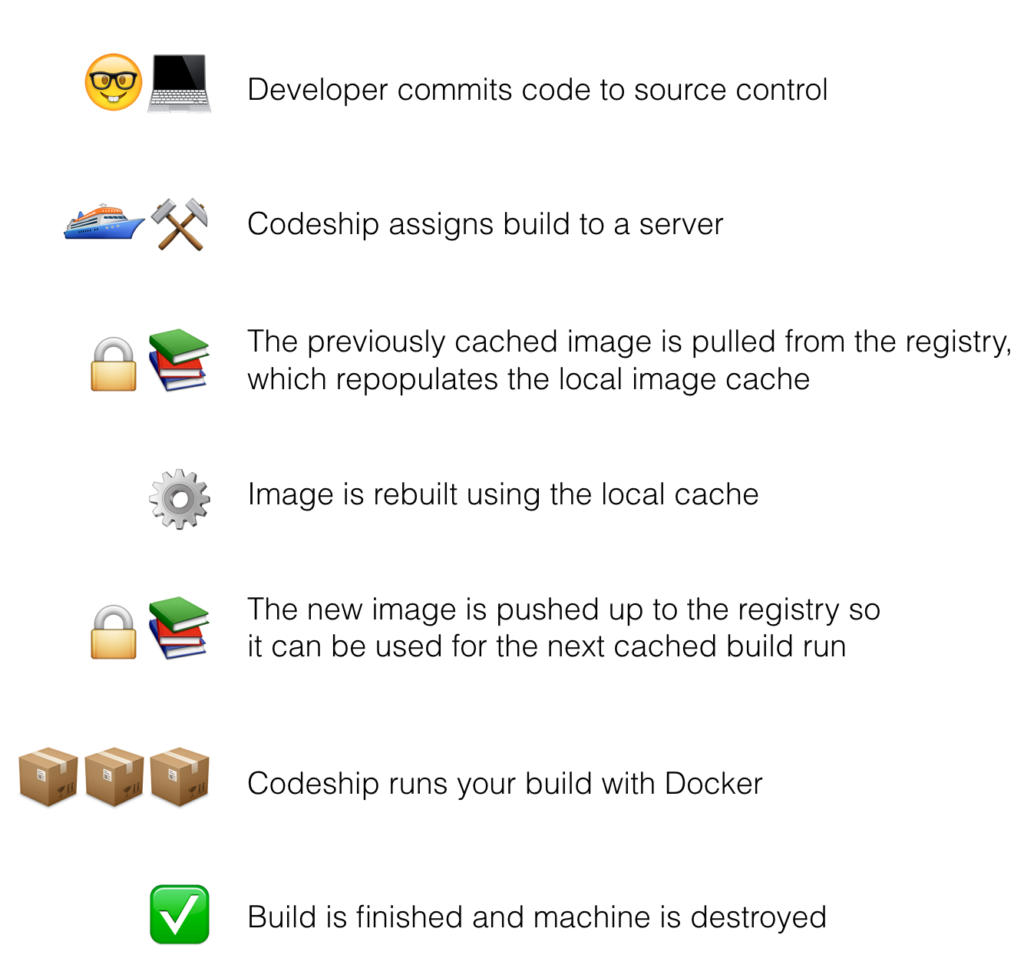

Relying on the Registry for Caching

For a long time, up until Docker 1.10, the Docker registry provided the best solution for remote caching. Using a customer-provided registry, the cached image was stored with a unique tag.

At the start of each build, Jet pulled down the cached image from the registry and then rebuilt the image using the docker build command. Because of the layered file system, Docker only needed to rebuild the layers that had changed when compared to the cached image we just pulled down. This significantly sped up image-build time, since most of the image could fall back to the cache.
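The flow described above can be sketched as a small shell function. This is a rough illustration rather than Jet’s actual code: the function name `registry_cached_build` and the cache reference are hypothetical, and the sketch assumes a pre-1.10 Docker client that is already logged in to the registry.

```shell
# Hypothetical sketch of registry-backed caching (pre-1.10 behavior).
# $1: image name to build, $2: registry reference used as the cache tag.
registry_cached_build() (
  image="$1"
  cache_ref="$2"

  # Warm the local layer cache; a failed pull just means a cold build.
  docker pull "$cache_ref" || echo "cache miss for $image" >&2

  # Unchanged layers are reused from the image we just pulled.
  docker build -t "$image" . || exit 1

  # Publish the fresh image under the cache tag for the next build.
  docker tag "$image" "$cache_ref" && docker push "$cache_ref"
)
```

A call might look like `registry_cached_build app registry.example.com/app:cache`, where both arguments are placeholders.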

For an engineer, this approach was ideal. Not only was it “Docker native” behavior, it was just a couple of extra calls to the Docker API. We already needed to parse encrypted credentials for registry push steps, so the engineering effort to implement this remote caching system was minimal.

This approach worked wonderfully, until it didn’t. Caching is such an important and mission-critical feature for our users. Some might argue that we never should have depended on a third party for its implementation, even if that third party was Docker itself.

Everything Is Broken

Earlier this year, Docker released Engine version 1.10, which included a breaking change related to the way image layers are named and stored. This change implemented content addressable storage.

Previously, layer IDs were random UUIDs dependent on build context. Building the same image on two machines would result in two different sets of layer IDs, which was not optimal for a lot of reasons. Content addressable storage updated the naming conventions so that layers with the exact same content also have identical IDs. This makes it possible to verify data integrity on all push, pull, save, and load steps. Instead of relying on the Dockerfile instruction to tell if the contents are identical, you can now rely on the image IDs and digests.
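The principle behind content addressing can be shown with a tiny sketch. The helper `content_id` is hypothetical and is not a Docker command; Docker computes its SHA-256 digests over image configuration and layer archives, whereas this example just hashes an arbitrary file and assumes `sha256sum` is available.

```shell
# Illustration only: name a blob by the SHA-256 of its bytes.
# content_id is a hypothetical helper, not part of Docker.
content_id() {
  sha256sum "$1" | cut -d ' ' -f 1
}
```

Two files with identical bytes get the same ID no matter which machine produced them, while any change in content yields a different ID.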

But 1.10 also updated the way that images are distributed from the registry.

While a docker pull my-image would previously give back the parent layer chain, including the IDs of all of the parent layers, in the 1.10 world you only get back a single layer with an ID. This doesn’t repopulate the local image cache in the same way that pre-1.10 pulls would have.

If you want to see for yourself, spin up a Docker host with no images and follow along with this example. We’ll build this Dockerfile as an image called rheinwein/cache-test.

    FROM busybox
    RUN echo hello
    RUN echo let’s
    RUN echo make
    RUN echo some
    RUN echo layers

$ docker build -t rheinwein/cache-test .

We can see the layer instructions and IDs locally with docker history rheinwein/cache-test:

    IMAGE          CREATED          CREATED BY                                      SIZE       COMMENT
    be5223baa801   40 seconds ago   /bin/sh -c echo layers                          0 B
    5855fcd4e55d   40 seconds ago   /bin/sh -c echo some                            0 B
    46d873a211d7   40 seconds ago   /bin/sh -c echo make                            0 B
    cec59edb74ae   41 seconds ago   /bin/sh -c echo let’s                           0 B
    8aaa0dcf27b2   41 seconds ago   /bin/sh -c echo hello                           0 B
    2b8fd9751c4c   5 weeks ago      /bin/sh -c #(nop) CMD ["sh"]                    0 B
    <missing>      5 weeks ago      /bin/sh -c #(nop) ADD file:9ca60502d646bdd815   1.093 MB

If you rebuild the image again, you’ll see happy little “using cache” messages in the build output. Now purge your images with docker rmi $(docker images -q). Make sure there are no images left on your host.

To see the difference in behavior, try pulling down the image from the Docker Hub and looking at the layers now.

    $ docker pull rheinwein/cache-test
    $ docker history rheinwein/cache-test
    IMAGE          CREATED         CREATED BY                                      SIZE       COMMENT
    be5223baa801   3 minutes ago   /bin/sh -c echo layers                          0 B
    <missing>      3 minutes ago   /bin/sh -c echo some                            0 B
    <missing>      3 minutes ago   /bin/sh -c echo make                            0 B
    <missing>      3 minutes ago   /bin/sh -c echo let’s                           0 B
    <missing>      3 minutes ago   /bin/sh -c echo hello                           0 B
    <missing>      5 weeks ago     /bin/sh -c #(nop) CMD ["sh"]                    0 B
    <missing>      5 weeks ago     /bin/sh -c #(nop) ADD file:9ca60502d646bdd815   1.093 MB

The good: I know the image is exactly what I expect it to be because the image ID matches what we previously built.

The bad: Try rebuilding this image. Unlike last time, no cache is being used.
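One quick way to compare the two situations is to count how many history entries have no local layer ID. The helper below is a hypothetical sketch; it relies on `docker history -q` printing `<missing>` for entries whose ID is not in the local image store.

```shell
# Count history entries whose layer ID is not in the local image store.
# missing_layers is a hypothetical helper name.
# Note: grep -c still prints 0 when nothing matches, but exits non-zero.
missing_layers() {
  docker history -q "$1" | grep -c '<missing>'
}
```

For the two listings in this walkthrough, `missing_layers rheinwein/cache-test` would report 1 after the local build and 6 after the pull.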

Given this new behavior, our remote caching system was completely broken. Because build performance is so important to our customers, we rolled back and stayed on Docker 1.9.2 in order to preserve the caching system. At this point, there was absolutely no way to repopulate the image cache other than using persistent build machines, which would have required a massive and fundamental change to the way that our build systems function.

After a lot of hearty and sometimes heated discussion in the Docker community, Docker restored some of the parent layer chain functionality in Engine 1.11, but only on save and load events.

So we got to work.

The New Caching System

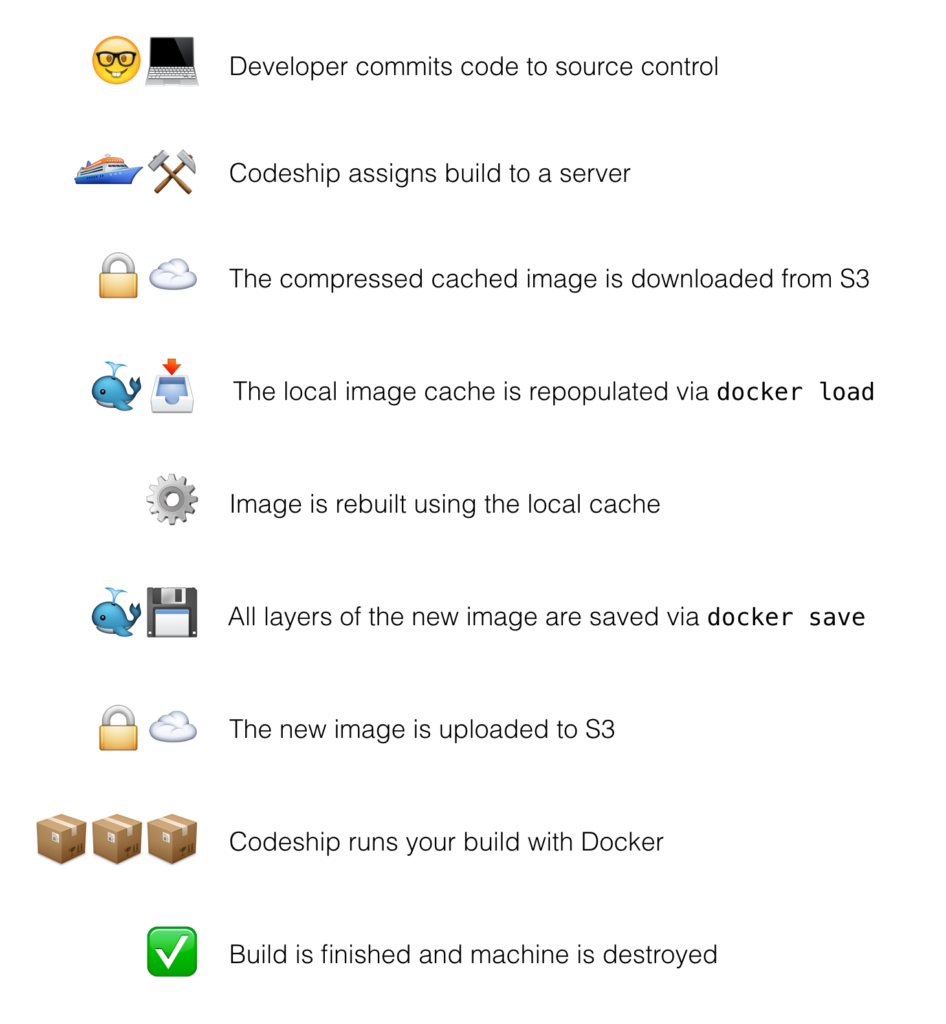

Given the update in 1.11, it was clear that we needed to design a remote caching system that relies on save and load events. The saved Docker images can then be stored remotely and pulled down before the start of the build. Essentially, the flow is identical to the previous implementation; only the storage component changes.

Instead of using the registry as our remote storage location, we can use an object store service like S3.
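A minimal sketch of that flow, assuming the AWS CLI is installed and credentials are already in the environment, might look like the following. The function names, the S3 URL, and the tarball path are all hypothetical, and which image references to pass to `store_cache` is left to the caller.

```shell
# Hypothetical helpers; the S3 URL and tarball path are placeholders.

# store_cache S3_URL TARBALL REF...
# Saves the given image references into one tarball and uploads it.
store_cache() (
  s3_url="$1"
  tarball="$2"
  shift 2
  docker save -o "$tarball" "$@" && aws s3 cp "$tarball" "$s3_url"
)

# restore_cache S3_URL TARBALL
# Downloads the tarball and loads it into the local image store.
restore_cache() (
  s3_url="$1"
  tarball="$2"
  if aws s3 cp "$s3_url" "$tarball"; then
    docker load -i "$tarball"
  else
    # A missing object is not an error; the build simply starts cold.
    echo "cache miss at $s3_url, building from scratch" >&2
  fi
)
```

In this sketch, `restore_cache` would run before the build and `store_cache` after it, mirroring the pull and push steps of the registry-based approach.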

Credentials and security

Since Codeship manages the remote cache S3 buckets, we control credentials and access. Not only are we responsible for issuing access to the buckets during builds, but we’re also responsible for storing objects in a sensible and secure way when they are at rest. In the previous caching system, these functions were the responsibility of the user, because the user needed to define which registry to use for caching, and they had full control over the objects.

Luckily, AWS’s Security Token Service (STS) does most of the heavy lifting for us. During a build, we generate a temporary set of credentials in order for that build to have the ability to get and put objects. Those credentials are scoped to the project itself, and the build only has access to objects for the project it belongs to.
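To make the scoping idea concrete, here is a rough sketch using the AWS CLI. It is not a description of Codeship’s setup: the one-prefix-per-project bucket layout, the role ARN, the helper names, and the choice of `assume-role` with a session policy are all assumptions made for illustration.

```shell
# Hypothetical sketch: the bucket layout (one key prefix per project) and
# the role ARN are assumptions, not details of Codeship's system.

# project_policy BUCKET PROJECT_ID
# Prints a session policy limited to getting and putting one project's objects.
project_policy() {
  printf '{"Version":"2012-10-17","Statement":[{"Effect":"Allow","Action":["s3:GetObject","s3:PutObject"],"Resource":"arn:aws:s3:::%s/%s/*"}]}\n' "$1" "$2"
}

# build_credentials ROLE_ARN BUCKET PROJECT_ID
# Requests one-hour credentials restricted by the session policy above.
build_credentials() {
  aws sts assume-role \
    --role-arn "$1" \
    --role-session-name "build-$3" \
    --policy "$(project_policy "$2" "$3")" \
    --duration-seconds 3600
}
```

Because the session policy only names the project’s own prefix, credentials issued this way cannot read or write another project’s objects even though they share a bucket.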

Transparency

Updating our caching system had a lot of benefits, like the fact that customers no longer have to set up private registries just for caching during Codeship builds. But alongside the advantage was one big disadvantage: Customers can no longer see the cached objects outside of a build run, because they are stored in a Codeship-owned bucket.

To give our users more visibility into their cache, each export command includes a log of all image layers and tags included in the exported image. Because layers with identical content have identical IDs, this makes it easier to check whether the images you use locally match the image that’s being used by Jet during a build on our infrastructure.

In the event that our users need to invalidate their caches for any reason, we also added a cache flusher to our build system. This feature is new: previously, users could manually delete cached images from their own repositories.

The cache flush button is located under General Project Settings in your Jet project. It will delete all cached images for the project, and during subsequent build runs, you’ll be able to see that a new cache is being populated. Look for “no cached image for $service” in the service logs to know that the cache has been wiped.
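Conceptually, a flush amounts to deleting every object stored for the project. The sketch below shows one way that could be done with the AWS CLI; the helper name and the one-prefix-per-project bucket layout are assumptions, not a description of what the button does internally.

```shell
# flush_cache BUCKET PROJECT_ID
# Hypothetical helper: deletes every cached object under the project's prefix.
flush_cache() {
  aws s3 rm "s3://$1/$2/" --recursive
}
```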

Performance bottlenecks and optimizations

Since rolling out the new caching system last month, our Jet platform has been running smoothly. However, we intentionally shipped the MVP version of this feature and then planned optimizations afterward. There were several pieces of the system that needed some fine-tuning, though unfortunately not all of them were within our control.

There is one big gotcha in the way that parent metadata is persisted using the new Docker save/load system: The entire parent chain needs to be saved out during the docker save command.

It’s not enough to simply say docker save cache-test. You have to include the IDs of the parent images you expect to be saved in the chain. If a layer is saved without its parent present, the parent metadata is not saved, and that layer is treated as the end of the chain. This means that we also can’t save customer images (like cache-test) and the base images (like busybox) separately and upload/download them in parallel. In that case, cache-test has no idea it should be looking for a busybox layer, since that metadata isn’t saved.

This sometimes results in huge images. Transfer speeds are close to the best case because the S3 bucket and build machine are both in the same AZ, but it’s still not ideal. We’d love to find a way to split up the cached image so we can upload and download in parallel and avoid caching duplicate base images.
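A common way to pass the whole chain is to feed the output of `docker history -q` back into `docker save`. The helper below is a hypothetical sketch of that idea, not Jet’s implementation; it assumes the parent layers are still present in the local image store, as they are right after a build.

```shell
# save_with_parents IMAGE TARBALL
# Hypothetical helper: saves IMAGE together with every parent layer ID
# that is still present in the local image store.
save_with_parents() (
  image="$1"
  tarball="$2"

  # The first line is the image itself; "<missing>" entries have no local ID.
  parents=$(docker history -q "$image" | sed 1d | grep -v '<missing>' || true)

  # $parents is intentionally unquoted so each ID becomes its own argument.
  docker save -o "$tarball" "$image" $parents
)
```

Everything lands in a single tarball, which is why the base image cannot be split out and transferred separately.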

The Future

Our main impetus for building a new caching system was so we could upgrade past Docker version 1.10 without having to give up caching. Build time is paramount for our users, so it made sense for us to stay on version 1.9.2 a bit longer than usual in order to build the new caching system.

Now that we’ve been able to upgrade past 1.10 without issue, we’re looking forward to continually shipping improvements to our build platform. Running Engine 1.10 or later allows us to support Compose V2 syntax, and work is well underway.

Interested in keeping up with engineering announcements for our Docker platform? Check out the Codeship Community Forum.

Reference: Building a Remote Caching System from our WCG partner Laura Frank at the Codeship Blog.