Node.js: Full application example

This article is part of our Academy Course titled Building web apps with Node.js.

In this course, you will get introduced to Node.js. You will learn how to install, configure and run the server and how to load various modules.

Additionally, you will build a sample application from scratch and also get your hands dirty with Node.js command line programming.


1. Introduction

As we have discussed, Node.js is a JavaScript runtime. Unlike traditional web servers, there is no separation between the web server and our code, and we do not have to customize external configuration files (XML or property files) to get the Node.js web server up. With Node we can create a web server with minimal code and deliver content to the end users easily and efficiently. In this lesson we will discuss how to create a web server with Node, how to work with static and dynamic file contents, and how to tune the performance of the Node.js web server.

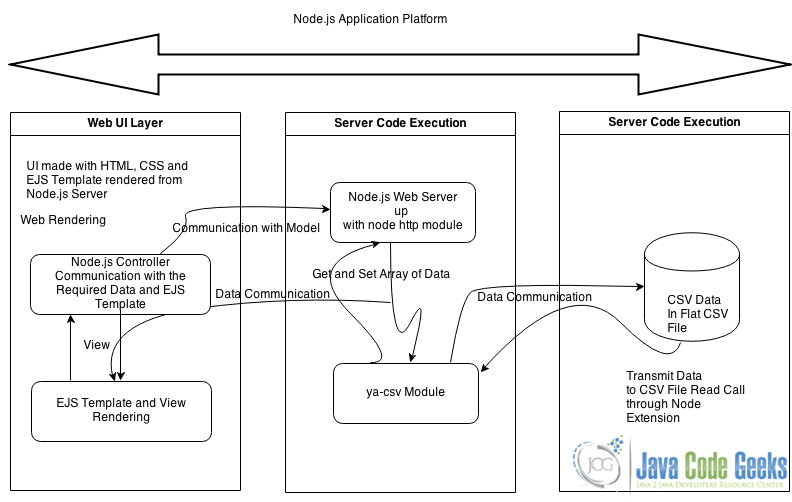

For this application, we will use the Node.js server as the web controller and for content routing. We will also persist and fetch data through CSV files by using a package (ya-csv) installed through NPM (the Node Package Manager). Finally, we will perform front-end rendering with EJS (Embedded JavaScript), which is also available through NPM as the ejs module.

Below is the architecture of the application in a nutshell:

Before going forward (assuming we have completed the Node.js installation), we need to issue the following commands to install all the required libraries.

npm install ya-csv

npm install ejs

You can find the code here: download.

2. Application Frontend rendering with EJS

JavaScript templates are rendered and cached on the client side without sending an HTTP request to the server. They are introduced as a layer in HTML in order to separate the HTML string appending from the server side. Additionally, they are used to get the required data from the server and then render this data according to predefined templates of HTML fragments.

So here comes EJS (Embedded JavaScript). EJS provides flexibility for using JavaScript templates, and some EJS templates are used in this example application. EJS is used by loading a template and rendering it with data from the server side. Once an EJS template is loaded, it is cached by default.

Example:

template = new EJS({url: '/firstejstemplate.ejs'})

We can provide extra options as arguments, which configure the following attributes:

- url {string}: Loads template from a file.

- text {string}: Uses the provided text as the template.

- name {string} (optional): An optional name that is used for caching. This defaults to the element ID or the provided URL.

- cache {boolean} (optional): Defaults to true. If we want to disable caching of templates, we make this false.

3. EJS Rendering

There are 2 ways to render a template.

- Invoke method ‘render’, which simply returns the text.

- Invoke method ‘update’, which is used to update the inner HTML of an element.

3.1. render (data) method

As we said, this method renders the template with the provided data.

html = new EJS({url: '/template.ejs'}).render(data)

In our example application we have not used the update method, as we stick to the basics and do not provide any Ajax functionality.

For example, the code mentioned above is used in the file registeredusers.ejs (HTML Rendering):

<% for(var i=0; i<users.length; i++) { %>

<%= users[i][0] %><%=users[i][1]%><%=users[i][2]%><%=users[i][3]%><%=users[i][4]%>

<% } %>

This is the templating section where a table is rendered based on a JavaScript array. The array is pre-populated with the values on the server.
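To make the loop easier to follow, here is a rough plain-JavaScript equivalent of what the template produces for each user. It is only a sketch and not how EJS works internally: the function name renderRows and the tr/td markup are assumptions made for illustration, while the five fields per user follow the order used when the data is written to the CSV file.

```javascript
// Plain-JavaScript sketch of the loop in registeredusers.ejs.
// renderRows and the table markup are illustrative assumptions.
function renderRows(users) {
  var html = '';
  for (var i = 0; i < users.length; i++) {
    html += '<tr>';
    // Each user is an array of five fields:
    // name, email, location, mobile number, notes.
    for (var j = 0; j < 5; j++) {
      html += '<td>' + users[i][j] + '</td>';
    }
    html += '</tr>\n';
  }
  return html;
}

var users = [
  ['Ann', 'ann@example.com', 'Paris', '12345', 'first user']
];
console.log(renderRows(users));
```

Each inner array becomes one table row, which is the same shape of data that the server passes to the template as users.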

So in server.js (data population section) we have:

- var ejs = require('ejs');: a variable declaration for the ejs component.

Data population through server:

ejs.renderFile('content/registeredusers.ejs',{ users : data},

function(err, result) {

// render on success

//console.log("this is result part -->>"+result);

if (!err) {

response.end(result);

}

// render on error

else {

response.end('An error occurred');

console.log(err);

}

});

Here registeredusers.ejs is a template file, and the users array is populated with a data array built in a section that we will examine later. The renderFile method renders the data with the template file asynchronously and passes the result to the standard callback function.

More on EJS can be found here.

4. The Node.js Server

In the node.js platform, we start up the server as follows:

var http = require('http');

http.createServer(function (request, response) {

....

});
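As a minimal sketch of a complete server, the elided callback can be replaced with a handler that just echoes the requested URL. The handler name handleRequest and the use of a PORT environment variable to decide whether to listen are assumptions of this sketch, not part of the article's server.js.

```javascript
var http = require('http');

// Request handler: receives the standard request and response objects.
function handleRequest(request, response) {
  response.writeHead(200, {'Content-Type': 'text/plain'});
  response.end('You requested ' + request.url);
}

var server = http.createServer(handleRequest);

// Assumption of this sketch: only start listening when a PORT
// environment variable is provided, e.g. PORT=8080 node server.js
if (process.env.PORT) {
  server.listen(Number(process.env.PORT), function () {
    console.log('Listening on port ' + process.env.PORT);
  });
}
```

Keeping the handler as a named function makes it possible to call it directly with stand-in request and response objects, which is handy for quick experiments.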

Let’s now discuss the different features in server.js:

- We have used the path module to extract the basename of the path (the final part of the path) and the decodeURI method to reverse the URI encoding applied by the client. We have stored all the static HTML, CSS, JS and template (EJS) files in a folder named content.

var lookup = path.basename(decodeURI(request.url)) || 'index.html',

f = 'content/' + lookup;

- We passed a callback function to http.createServer, which gives us access to the standard web request and response objects. We used the request object to find the requested URL, decoded it with the decodeURI method and took its basename with path. With the basename we then look up the matching resource in the "content" folder.

- From here we have called additional functions to retrieve data, persist the data, or match the filename and send the loaded contents for rendering. We have used the fs (filesystem) module to load the content and then return that content to the createServer callback.

// Data fetch from user form posting and persist

if (request.method === "POST") {

...Some Code

request.on('data', function (chunk) {

postData += chunk;

..... Some Code

}).on('end', function () {

....some code

});

or

// Fetching the files and templates from filesystem and render the response to the client

if (request.method === "GET") {

fs.exists(f, function (exists) { // Use of fileSystem Module

....some code

// Serving the file in chunk for response

var s = fs.createReadStream(f).once('open', function () {

console.log('stream deliver');

response.writeHead(200, headers);

this.pipe(response);

}).once('error', function (e) {

console.log(e);

response.writeHead(500);

response.end('Server Error!');

});

....some code

}

// Response while no such content found

response.writeHead(404);

response.end('Page Not Found!');

});

- We should not open the static resources from disk for every client request, so we should offer a minimal caching system. The application will serve the first request from the static storage, i.e. from the file(s), and store the content in a cache. Afterwards it will serve all subsequent requests for static resources from the cache, i.e. from the process memory.

So our bare-bones caching code will look like this:

var cacheObj = {

cachestore: {},

maxSize: 26214400, //(bytes) 25mb

maxAge: 5400 * 1000, //(ms) 1 and a half hours

cleaninterval: 7200 * 1000,//(ms) two hours

cleanetimestart: 0, //to be set dynamically

clean: function (now) {

if ((now - this.cleaninterval) > this.cleanetimestart) {

console.log('cleaning data...');

this.cleanetimestart = now;

var that = this;

Object.keys(this.cachestore).forEach(function (file) {

if (now > that.cachestore[file].timestamp + that.maxAge) {

delete that.cachestore[file];

}

});

}

}

};

We have added the code to read the file just once and then load its contents into memory. After that, we respond to client requests for the file from memory. We have also set a maxAge parameter, which is how long an entry may stay in the cache before a cleanup removes it, and a maxSize parameter, which is the maximum size of a file that we are willing to cache.

Here is a code snippet with which we store the file in the cache store:

...some code....

fs.stat(f, function (err, stats) {

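if (!err && stats.size < cacheObj.maxSize) { // skip caching if stat failed or the file is too large

var bufferOffset = 0;

cacheObj.cachestore[f] = {content: Buffer.alloc(stats.size), // Buffer.alloc replaces the deprecated new Buffer(size)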

timestamp: Date.now()};

s.on('data', function (data) {

data.copy(cacheObj.cachestore[f].content, bufferOffset);

bufferOffset += data.length;

});

}

});

...some code....

Here is a code snippet with which we serve the client requests from the cache store:

fs.exists(f, function (exists) {

if (exists) {

var headers = {'Content-type': mimeTypes[path.extname(f)]};

if (cacheObj.cachestore[f]) {

console.log('cache deliver');

response.writeHead(200, headers);

response.end(cacheObj.cachestore[f].content);

return;

}

....other codes....

Here, the fs variable refers to the "fs" module of Node.js and f refers to the path of the requested file.
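The path f and the mimeTypes lookup used in the snippet are defined elsewhere in server.js. As a hedged sketch of how they could fit together, the helpers below resolve a request URL to a file under the content folder and build the response headers. The function names resolveFile and headersFor, the contents of the mimeTypes map and the fallback type are assumptions for illustration; the article's actual mimeTypes object is not shown.

```javascript
var path = require('path');

// Hypothetical MIME map; the real mimeTypes object is not shown in the article.
var mimeTypes = {
  '.html': 'text/html',
  '.css': 'text/css',
  '.js': 'text/javascript'
};

// Map a request URL to a file path under the content folder.
function resolveFile(url) {
  var lookup = path.basename(decodeURI(url)) || 'index.html';
  return 'content/' + lookup;
}

// Build the response headers for a resolved file path.
function headersFor(f) {
  // Falling back to a generic binary type is an assumption of this sketch.
  var type = mimeTypes[path.extname(f)] || 'application/octet-stream';
  return {'Content-type': type};
}

console.log(resolveFile('/'));                    // content/index.html
console.log(resolveFile('/css/my%20style.css'));  // content/my style.css
console.log(headersFor('content/index.html'));
```

Because only the basename is kept, every file is looked up directly inside content and any directory part of the URL is dropped.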

In the caching snippets above, we first store the file in the cachestore property of the cache object, keyed by its path.

The next time any client requests the file, we check whether it is stored in the cache object and, if so, retrieve the entry containing the cached data. Inside the fs.stat callback, in the stream's data listener, we call data.copy in order to store the data in the content property of the cachestore entry.

Please note that if we make any changes to the contents of the file, those will not be reflected to the next client, as those file copies are served from the cache memory. We will need to restart the server in order to provide the updated version of the content.

Caching content is certainly an improvement in comparison to reading a file from disk for every request.

5. Use of Streaming Functionality

For better performance, we should stream the file from disk and pipe it directly to the response object, sending data to the network socket one piece at a time. So we have used fs.createReadStream in order to initialize a stream, which is piped to the response object. In this case, the stream created by fs.createReadStream needs to interface with the request and response objects, which are available inside the http.createServer callback.

Here we have filled the cacheObj.cachestore[f].content buffer while interfacing with the readStream object.

The code for fs.createReadStream is the following:

var s = fs.createReadStream(f).once('open', function () {

console.log('from stream');

response.writeHead(200, headers);

this.pipe(response);

}).once('error', function (e) {

console.log(e);

response.writeHead(500);

response.end('Server Error!');

});

This will return a readStream object which streams the file that is pointed at by the f variable. readStream emits events that we need to listen to. We listen with the shorthand once:

var s = fs.createReadStream(f).once('open', function () {

//do stuff when the readStream opens

});

Here, for each file, each stream is only going to open once and we do not need to keep listening to it.
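The difference between on and once can be seen with a plain EventEmitter, which is the class that readStream builds on. This is a small sketch with made-up counters, not code from server.js.

```javascript
var EventEmitter = require('events').EventEmitter;

var emitter = new EventEmitter();
var onCount = 0;
var onceCount = 0;

// A listener added with on stays registered for every emit.
emitter.on('open', function () { onCount++; });
// A listener added with once is removed after its first call.
emitter.once('open', function () { onceCount++; });

emitter.emit('open');
emitter.emit('open');

console.log(onCount, onceCount); // 2 1
```

Since a file stream emits open only a single time, once expresses that intent and lets the listener be released afterwards.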

On top of that we have implemented error handling with the following piece of code:

var s = fs.createReadStream(f).once('open', function () {

//do work when the readStream opens

}).once('error', function (e) {

console.log(e);

response.writeHead(500);

response.end('Server Error!');

});

Now the stream.pipe or this.pipe method enables us to take our file from the disk and stream it directly to the socket through the standard response object. The pipe method detects when the stream has ended and calls response.end for us.

We used the data event to capture each chunk as it is being streamed and copied it into the buffer stored in cacheObj.cachestore[f].content. We used fs.stat to obtain the file size needed to allocate that cache buffer.

What we achieved is that, instead of the client waiting for the server to load the full file from disk before sending it back, we used the stream to load and send the file in small pieces, one at a time. This approach is especially useful for larger files, where there would otherwise be a noticeable delay between the file being requested and the client starting to receive it.
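The following self-contained sketch shows the same pattern outside of an HTTP server. It makes several assumptions for the sake of a runnable example: a temporary 10,000-byte file is created on the fly, a Writable stream named sink stands in for the response object, and a small highWaterMark of 4096 bytes is chosen only to force the file to arrive in several chunks.

```javascript
var fs = require('fs');
var os = require('os');
var path = require('path');
var stream = require('stream');

// Create a small temporary file so the sketch is self-contained.
var file = path.join(os.tmpdir(), 'stream-sketch-' + process.pid + '.txt');
fs.writeFileSync(file, 'x'.repeat(10000));

// Stand-in for the response object: counts the bytes it receives.
function makeSink() {
  var sink = new stream.Writable({
    write: function (chunk, encoding, callback) {
      sink.received += chunk.length;
      callback();
    }
  });
  sink.received = 0;
  return sink;
}

// Stream f into sink; resolves with the chunk count and byte total.
function streamFile(f, sink) {
  return new Promise(function (resolve, reject) {
    var chunks = 0;
    var s = fs.createReadStream(f, {highWaterMark: 4096});
    s.once('open', function () {
      this.pipe(sink); // pipe ends the sink when the file is exhausted
    }).once('error', reject);
    s.on('data', function () { chunks++; }); // same hook the cache uses
    sink.once('finish', function () {
      resolve({chunks: chunks, bytes: sink.received});
    });
  });
}

var done = streamFile(file, makeSink()).then(function (result) {
  fs.unlinkSync(file); // remove the temporary file
  console.log(result);
  return result;
});
```

The sink starts receiving data after the first chunk has been read, without waiting for the whole file, which is the behaviour the response object benefits from in server.js.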

We did this by using fs.createReadStream to start streaming the file from disk. The method fs.createReadStream creates a readStream, which inherits from the EventEmitter class. We used listeners registered with on to control the flow of the stream logic:

s.on('data', function (data) {

....code related to data processing...

});

Then, we added an open event listener using the once method, as we only want the listener to be called the first time open is triggered. We respond to the open event by writing the headers and using the pipe method to send the data to the client. Here stream.pipe handles the data flow.

We also created a buffer whose size is the size of the file (the Buffer constructor accepts a size, an array or a string). To get the size, we used the asynchronous fs.stat and read the size property in the callback. The data event passes a Buffer to its callback as the parameter.

Here a file smaller than the stream's chunk size (the bufferSize option in early Node.js releases, highWaterMark in current ones) will trigger a single data event, because the entire file fits into the first chunk of data. For larger files, we fill the cacheObj.cachestore[f].content buffer one piece at a time.

We managed to copy small binary chunks into the binary cacheObj.cachestore[f].content buffer. Each time a new chunk arrives and is added to that buffer, we update the bufferOffset variable by adding the length of the chunk to it. When we call the copy method on the chunk buffer, we pass bufferOffset as the second parameter, so that each chunk is written right after the previous one.
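The offset bookkeeping can be tried in isolation. The sketch below is an illustration rather than code from server.js: the helper name fillBuffer and the sample chunks are made up, and it uses Buffer.alloc and Buffer.from, which current Node.js versions recommend over the new Buffer constructor.

```javascript
// Assemble a sequence of chunks into one preallocated buffer,
// mirroring what the data listener does for a cache entry.
function fillBuffer(totalSize, chunks) {
  var content = Buffer.alloc(totalSize);
  var bufferOffset = 0;
  chunks.forEach(function (data) {
    // Second argument: position in the target where writing starts.
    data.copy(content, bufferOffset);
    bufferOffset += data.length;
  });
  return content;
}

var chunks = [Buffer.from('Hello, '), Buffer.from('Node'), Buffer.from('.js')];
var content = fillBuffer(14, chunks);
console.log(content.toString()); // Hello, Node.js
```

Without the running offset, every chunk would be copied to position 0 and overwrite the one before it.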

Additionally, we have stored a timestamp property with each entry in cacheObj.cachestore. It is used for cleaning up the cache, which serves as an alternative to restarting the server after a content change.

The clean method of the cacheObj object is as follows (shown here without the interval check):

clean: function(now) {

var that = this; // keep a reference to cacheObj for use inside the forEach callback

Object.keys(this.cachestore).forEach(function (file) {

if (now > that.cachestore[file].timestamp + that.maxAge) {

delete that.cachestore[file];

}

});

}

Here we check every object in the cache store against the maxAge property and simply delete an object once its maxAge has expired. We call cacheObj.clean at the bottom of the server like this: cacheObj.clean(Date.now());

cacheObj.clean loops through cacheObj.cachestore and checks whether the cached objects have exceeded their specified lifetime. The maxAge parameter is a constant that defines this lifetime. If a cached object has expired, we remove it from the store.

We have added the cleaninterval to specify how long to wait between cache cleans. The cleanetimestart records when the cache was last cleaned, so that we can determine how long ago that was.

The code for that is the following:

cleaninterval: 7200 * 1000,//(ms) two hours

cleanetimestart: 0, //to be set dynamically

The check in the clean method is as follows:

if (now - this.cleaninterval > this.cleanetimestart) {

//Do the work

}

Here clean will clean the cache store only if more than cleaninterval milliseconds have passed since the last clean.

The above code was a simple example of building a minimal cacheObj object within the Node.js server.
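The interplay between maxAge and cleaninterval is easier to see with small numbers. The sketch below wraps the same clean logic in a hypothetical makeCache factory and passes explicit timestamps instead of Date.now(); the factory name and the tiny time values are assumptions chosen to keep the example short.

```javascript
// Factory for a cache object shaped like cacheObj.
function makeCache(maxAge, cleaninterval) {
  return {
    cachestore: {},
    maxAge: maxAge,
    cleaninterval: cleaninterval,
    cleanetimestart: 0,
    clean: function (now) {
      if (now - this.cleaninterval > this.cleanetimestart) {
        this.cleanetimestart = now;
        var that = this;
        Object.keys(this.cachestore).forEach(function (file) {
          if (now > that.cachestore[file].timestamp + that.maxAge) {
            delete that.cachestore[file];
          }
        });
      }
    }
  };
}

// maxAge 100 ms, clean at most every 50 ms.
var cache = makeCache(100, 50);
cache.cachestore['a.html'] = {content: Buffer.from('a'), timestamp: 1000};

cache.clean(1060); // interval passed, but the entry is only 60 ms old: kept
console.log(Object.keys(cache.cachestore)); // [ 'a.html' ]
cache.clean(1105); // entry is too old, but only 45 ms since last clean: skipped
console.log(Object.keys(cache.cachestore)); // [ 'a.html' ]
cache.clean(1120); // 60 ms since last clean and entry is 120 ms old: removed
console.log(Object.keys(cache.cachestore)); // []
```

The second call shows the consequence of the interval check: an expired entry can stay in the cache until the next clean that is actually allowed to run.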

5.1. The model part – csv handling

When we receive data through the POST method, we open a CSV file in append mode and write the data from the POST parameters to it. After that, we read the file back, render the data with the EJS template and send the result to the client.

Below is the code snippet along with the relevant documentation:

if (request.method === "POST") { // Checking the request method.

var postData = '';

request.on('data', function (chunk) {

postData += chunk; // accumulate the data with the 'chunk'

console.log('postData.length-->>'+postData.length);

if (postData.length > maxData) { // checking for a max size of data

postData = '';

this.pause();

response.writeHead(413); // Request Entity Too Large

response.end('Too large');

}

}).on('end', function () { // listener for the signal of end of data.

if (!postData) { response.end(); return; } //prevents empty post requests from crashing the server

var postDataObject = querystring.parse(postData);

var writeCSVFile = csv.createCsvFileWriter('users.csv', {'flags': 'a'});

// opening the file in append mode

var data = [postDataObject.name,postDataObject.email,postDataObject.location,postDataObject.mobileno,postDataObject.notes]; // preparation of the data array

writeCSVFile.writeRecord(data); // Writing to the CSV file

console.log('User Posted 1234:\n', JSON.stringify(util.inspect(postDataObject)));

var readerCSV = csv.createCsvFileReader('users.csv'); // Opening of the file

var data = [];

readerCSV.on('data', function(rec) {

data.push(rec); // getting all data in memory

}).on('end', function() {

console.log(data);

ejs.renderFile('content/registeredusers.ejs',{ users : data}, // Rendering of the data with corresponding EJS template

function(err, result) {

// render on success

//console.log("this is result part -->>"+result);

if (!err) {

response.end(result);

}

// render on error

else {

response.end('An error occurred');

console.log(err);

}

});

});

});

} // closes the POST branch
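The body handling in this snippet can be tried without a server or a CSV file. The sketch below is an illustration under assumptions: the createCollector helper and its method names are made up, and it only covers accumulating chunks up to a limit and turning the URL-encoded body into the record array, not the ya-csv calls.

```javascript
var querystring = require('querystring');

// Collects body chunks up to maxData characters.
function createCollector(maxData) {
  var postData = '';
  var tooLarge = false;
  return {
    // Returns false once the limit is exceeded, so the caller
    // can answer with 413 and stop reading.
    add: function (chunk) {
      if (tooLarge) { return false; }
      postData += chunk;
      if (postData.length > maxData) {
        postData = '';
        tooLarge = true;
        return false;
      }
      return true;
    },
    // Turn the URL-encoded body into the record written to the CSV file.
    toRecord: function () {
      var o = querystring.parse(postData);
      return [o.name, o.email, o.location, o.mobileno, o.notes];
    }
  };
}

var collector = createCollector(1024);
collector.add('name=Ann&email=ann%40example.com');
collector.add('&location=Paris&mobileno=12345&notes=first+user');
console.log(collector.toRecord());
// [ 'Ann', 'ann@example.com', 'Paris', '12345', 'first user' ]
```

Note that the body may arrive split at any position, which is why the chunks are concatenated first and parsed only once the end of the data has been signalled.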

To sum up, in this article we have discussed:

- The Node.js web server creation.

- A minimal cache object creation for data handling.

- Streaming of data for optimised performance.

- Handling data from a GET request.

- Handling data from a POST request.

- A dynamic router for controlling navigation.

There is certainly room for improvement in the Node.js code. For example, we could move the CSV handling code into a separate model layer, which would make the design more elegant, and we could separate the Node middleware components by function, such as the caching and the independent model handling. These enhancements are left to the reader as an exercise.

6. Download the Source Code

In this tutorial we discussed building a full application in Node.js. You may download the source code here: Nodejs_full-app.zip