Python: scikit-learn – Training a classifier with non numeric features

Following on from my previous posts on training a classifier to pick out the speaker in sentences of HIMYM transcripts the next thing to do was train a random forest of decision trees to see how that fared.

I’ve used scikit-learn for this before so I decided to use that. However, before building a random forest I wanted to check that I could build an equivalent decision tree.

I initially thought that scikit-learn’s DecisionTree classifier would take in data in the same format as nltk’s so I started out with the following code:

import json

import nltk

import collections

from himymutil.ml import pos_features

from sklearn import tree

from sklearn.cross_validation import train_test_split

with open('data/import/trained_sentences.json', 'r') as json_file:

json_data = json.load(json_file)

tagged_sents = []

for sentence in json_data:

tagged_sents.append([(word['word'], word['speaker']) for word in sentence['words']])

featuresets = []

for tagged_sent in tagged_sents:

untagged_sent = nltk.tag.untag(tagged_sent)

sentence_pos = nltk.pos_tag(untagged_sent)

for i, (word, tag) in enumerate(tagged_sent):

featuresets.append((pos_features(untagged_sent, sentence_pos, i), tag) )

clf = tree.DecisionTreeClassifier()

train_data, test_data = train_test_split(featuresets, test_size=0.20, train_size=0.80)

>>> train_data[1]

({'word': u'your', 'word-pos': 'PRP$', 'next-word-pos': 'NN', 'prev-word-pos': 'VB', 'prev-word': u'throw', 'next-word': u'body'}, False)

>>> clf.fit([item[0] for item in train_data], [item[1] for item in train_data])

Traceback (most recent call last):

File '<stdin>', line 1, in <module>

File '/Users/markneedham/projects/neo4j-himym/himym/lib/python2.7/site-packages/sklearn/tree/tree.py', line 137, in fit

X, = check_arrays(X, dtype=DTYPE, sparse_format='dense')

File '/Users/markneedham/projects/neo4j-himym/himym/lib/python2.7/site-packages/sklearn/utils/validation.py', line 281, in check_arrays

array = np.asarray(array, dtype=dtype)

File '/Users/markneedham/projects/neo4j-himym/himym/lib/python2.7/site-packages/numpy/core/numeric.py', line 460, in asarray

return array(a, dtype, copy=False, order=order)

TypeError: float() argument must be a string or a numberIn fact, the classifier can only deal with numeric features so we need to translate our features into that format using DictVectorizer.

from sklearn.feature_extraction import DictVectorizer vec = DictVectorizer() X = vec.fit_transform([item[0] for item in featuresets]).toarray() >>> len(X) 13016 >>> len(X[0]) 7302 >>> vec.get_feature_names()[10:15] ['next-word-pos=EX', 'next-word-pos=IN', 'next-word-pos=JJ', 'next-word-pos=JJR', 'next-word-pos=JJS']

We end up with one feature for every key/value combination that exists in featuresets.

I was initially confused about how to split up training and test data sets but it’s actually fairly easy – train_test_split allows us to pass in multiple lists which it splits along the same seam:

vec = DictVectorizer() X = vec.fit_transform([item[0] for item in featuresets]).toarray() Y = [item[1] for item in featuresets] X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.20, train_size=0.80)

Next we want to train the classifier which is a couple of lines of code:

clf = tree.DecisionTreeClassifier() clf = clf.fit(X_train, Y_train)

I wrote the following function to assess the classifier:

import collections

import nltk

def assess(text, predictions_actual):

refsets = collections.defaultdict(set)

testsets = collections.defaultdict(set)

for i, (prediction, actual) in enumerate(predictions_actual):

refsets[actual].add(i)

testsets[prediction].add(i)

speaker_precision = nltk.metrics.precision(refsets[True], testsets[True])

speaker_recall = nltk.metrics.recall(refsets[True], testsets[True])

non_speaker_precision = nltk.metrics.precision(refsets[False], testsets[False])

non_speaker_recall = nltk.metrics.recall(refsets[False], testsets[False])

return [text, speaker_precision, speaker_recall, non_speaker_precision, non_speaker_recall]We can call it like so:

predictions = clf.predict(X_test)

assessment = assess('Decision Tree', zip(predictions, Y_test))

>>> assessment

['Decision Tree', 0.9459459459459459, 0.9210526315789473, 0.9970134395221503, 0.9980069755854509]Those values are in the same ball park as we’ve seen with the nltk classifier so I’m happy it’s all wired up correctly.

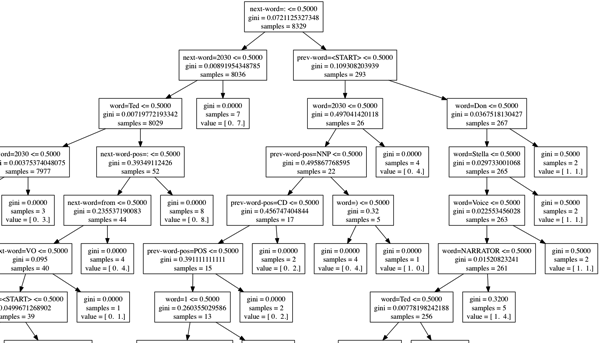

The last thing I wanted to do was visualise the decision tree that had been created and the easiest way to do that is export the classifier to DOT format and then use graphviz to create an image:

with open('/tmp/decisionTree.dot', 'w') as file:

tree.export_graphviz(clf, out_file = file, feature_names = vec.get_feature_names())dot -Tpng /tmp/decisionTree.dot -o /tmp/decisionTree.png

The decision tree is quite a few levels deep so here’s part of it:

The full script is on github if you want to play around with it.

| Reference: | Python: scikit-learn – Training a classifier with non numeric features from our WCG partner Mark Needham at the Mark Needham Blog blog. |