Working with DynamoDB

Recently, I worked on an IoT-based project where we had to store time-series data coming from multiple sensors and show real-time data to an end user. We also needed to generate reports and analyze the gathered information.

To handle the continuous stream of data from the sensors, we chose DynamoDB for storage. DynamoDB promises to handle large volumes of data with single-digit millisecond latency at any scale. Since it’s a fully managed database service, we never had to worry about scaling, architecture, or hardware provisioning. Plus, we were using AWS IoT for the sensors, so choosing an AWS NoSQL database like DynamoDB was the right decision.

Here is what Amazon says about DynamoDB:

Fully managed NoSQL database service that provides fast and predictable performance with seamless scalability.

The Basics

DynamoDB tables are made of items, which are similar to rows in relational databases, and each item is made up of one or more attributes. Attributes can be scalar types (string, number, binary, Boolean, or null), sets, or nested documents (lists and maps).
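To see what "scalar or nested" means in practice, DynamoDB’s low-level API tags every attribute value with a type descriptor, and nested documents become maps (M) and lists (L). The Ruby SDK’s client does this conversion for you, so you never write it by hand; the simplified converter below only illustrates the wire format. It is a sketch that ignores sets and binary values, and the sample user data is made up.

```ruby
require 'json'

# Simplified sketch of DynamoDB's low-level attribute-value format.
# The SDK client performs this conversion automatically; sets (SS/NS/BS)
# and binary (B) values are deliberately not handled here.
def to_attribute_value(value)
  case value
  when String then { 'S' => value }
  when Numeric then { 'N' => value.to_s } # numbers are sent as strings
  when true, false then { 'BOOL' => value }
  when nil then { 'NULL' => true }
  when Array then { 'L' => value.map { |v| to_attribute_value(v) } }
  when Hash then { 'M' => value.map { |k, v| [k.to_s, to_attribute_value(v)] }.to_h }
  else raise ArgumentError, "unsupported type: #{value.class}"
  end
end

# Made-up sample item with scalar and nested attributes
user = {
  'email' => 'jane@example.com',
  'first_name' => 'Jane',
  'addresses' => [{ 'city' => 'Austin', 'country' => 'USA' }]
}
item = user.map { |k, v| [k, to_attribute_value(v)] }.to_h
puts JSON.pretty_generate(item)
```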

Everything starts with the Primary Key Index

Each item is uniquely identified by its primary key. The primary key is made of one or two attributes: the Partition Key (Hash Key) and an optional Sort Key (Range Key). DynamoDB doesn’t magically spread data across multiple servers to boost performance; it relies on partitioning to achieve that.

Partitioning is similar to sharding in MongoDB and other distributed databases, where data is spread across multiple database servers to distribute the load and deliver consistently high performance. Think of partitions as shards: the Hash Key in the primary key determines which partition an item is stored in.

To determine the partition, DynamoDB passes the Hash Key to an internal hash function that is designed to spread items evenly across partitions, provided the Partition Key has many distinct values. This is why it is called the Partition Key or Hash Key. The Sort Key, on the other hand, determines the order in which items with the same Partition Key are stored, and allows more than one item to share the same Partition Key.
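To make this concrete, here is a toy model in Ruby. It is not how DynamoDB is implemented: MD5 and a fixed count of four partitions stand in for DynamoDB’s internal hash function and partition management, which are not exposed. It only shows the two ideas above: the same Partition Key always maps to the same partition, and items sharing a Partition Key are kept in Sort Key order.

```ruby
require 'digest'

# Toy model only: MD5 and a fixed partition count stand in for DynamoDB's
# internal hash function and partition management, which are not exposed.
PARTITION_COUNT = 4

# Maps a partition key to a partition number by hashing it.
def partition_for(partition_key)
  Digest::MD5.hexdigest(partition_key.to_s).to_i(16) % PARTITION_COUNT
end

# Groups items by partition, then keeps items that share a partition key
# in sort key order.
def distribute(items)
  items.group_by { |item| partition_for(item[:pk]) }
       .transform_values { |group| group.sort_by { |item| [item[:pk], item[:sk]] } }
end

items = [
  { pk: 'AAPL', sk: '2017-01-03' },
  { pk: 'AAPL', sk: '2017-01-02' },
  { pk: 'MSFT', sk: '2017-01-02' }
]
layout = distribute(items)
layout.each { |partition, stored| puts "partition #{partition}: #{stored.inspect}" }
```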

When a Sort Key is present, it is combined with the Partition Key (Hash Key) to form the primary key, which uniquely identifies a particular item.

This is very useful for time-series data such as stock prices, where the price of each stock changes over time and you need to track its price history. In such cases, the stock name can be the Partition Key and the date can be the Range Key, so that each stock’s prices are sorted by time.
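For example, a Query for one stock’s prices over a date range could be built as follows. This is a sketch under assumptions: the table name stock_prices and the attribute names symbol and date are hypothetical, dates are stored as ISO 8601 strings (which sort chronologically as plain strings), and the returned hash is what you would pass to the SDK client’s query method.

```ruby
# Builds Query parameters for one stock's prices between two dates.
# Hypothetical table "stock_prices": Partition Key "symbol", Sort Key "date".
def stock_price_query(symbol, from_date, to_date)
  {
    table_name: 'stock_prices',
    # "date" is a DynamoDB reserved word, so attribute names go through placeholders
    key_condition_expression: '#sym = :symbol AND #d BETWEEN :from AND :to',
    expression_attribute_names: { '#sym' => 'symbol', '#d' => 'date' },
    expression_attribute_values: {
      ':symbol' => symbol,
      ':from' => from_date,
      ':to' => to_date
    }
  }
end

params = stock_price_query('AAPL', '2017-01-01', '2017-01-31')
# With a real client: $ddb.query(params).items
```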

Secondary indexes

The primary key lets you identify items and store virtually unlimited amounts of data without having to worry about performance or scaling, but you will soon realize that querying by anything other than the primary key is difficult and inefficient.

Having mostly worked with relational databases, I found querying to be the most confusing aspect of DynamoDB. You don’t have joins or views as in relational databases; denormalization helps, but not much.

Secondary indexes in DynamoDB follow the same structure as the primary key: a Partition Key plus a Sort Key, where the Sort Key is optional for Global Secondary Indexes. DynamoDB supports two types of secondary indexes: the Local Secondary Index and the Global Secondary Index.

Local Secondary Index (LSI):

A Local Secondary Index (LSI) shares the Partition Key of the table’s primary key but lets you use a different attribute as its Sort Key. The Sort Key attribute must be of a scalar type. An LSI can only be created together with its table.

When you create an LSI, you specify which attributes, besides the Partition Key and Sort Key, are projected (copied) into the index; the LSI then maintains those projected attributes along with the keys. The LSI data and the table data for a given Partition Key value are stored in the same partition.
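As an illustration, here is how an LSI could be declared in the create_table parameters for a hypothetical stock_prices table keyed on symbol (Partition Key) and date (Sort Key), following the stock example above. The index name, the close_price attribute, and the projected volume attribute are assumptions. The LSI’s key schema repeats the table’s Partition Key and only swaps the Sort Key, and the LSI has no provisioned throughput of its own because it shares the table’s.

```ruby
# Sketch of create_table parameters for a hypothetical stock_prices table with one LSI.
STOCK_PRICES_TABLE = {
  table_name: 'stock_prices',
  # every attribute used in a key schema (table or index) must be defined here
  attribute_definitions: [
    { attribute_name: 'symbol', attribute_type: 'S' },
    { attribute_name: 'date', attribute_type: 'S' },
    { attribute_name: 'close_price', attribute_type: 'N' }
  ],
  key_schema: [
    { attribute_name: 'symbol', key_type: 'HASH' },
    { attribute_name: 'date', key_type: 'RANGE' }
  ],
  local_secondary_indexes: [
    {
      index_name: 'symbol_close_price_index',
      key_schema: [
        { attribute_name: 'symbol', key_type: 'HASH' },      # same Partition Key as the table
        { attribute_name: 'close_price', key_type: 'RANGE' } # alternative Sort Key
      ],
      # project "volume" in addition to the key attributes
      projection: { projection_type: 'INCLUDE', non_key_attributes: ['volume'] }
    }
  ],
  provisioned_throughput: { read_capacity_units: 1, write_capacity_units: 1 }
}.freeze

# With a real client: $ddb.create_table(STOCK_PRICES_TABLE)
```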

Global Secondary Index (GSI):

Sooner or later, you will need to query data by an attribute other than the Partition Key. You can do this by creating a Global Secondary Index (GSI) on that attribute. A GSI follows the same structure as the primary key, but its Partition Key can be a different attribute from the table’s, and it can optionally have a Sort Key.

As with an LSI, you specify the attributes to project when creating the GSI. Both the Partition Key and the Sort Key attributes must be scalar.

You should definitely read the official documentation on GSIs and LSIs to understand how indexes work in detail.


Setup for DynamoDB

DynamoDB doesn’t require any special setup, as it is a web service fully managed by AWS; you just need API credentials to start working with it. There are two primary ways to interact with DynamoDB from Ruby: the AWS SDK for Ruby or Dynamoid.

Both libraries are quite good, and Dynamoid offers an Active Record-style interface. But to understand how DynamoDB works, it’s better to start with the AWS SDK for Ruby.

In your Gemfile:

```ruby
gem 'aws-sdk', '~> 2'
```

First of all, you need to initialize a DynamoDB client, preferably in an initializer, so that you don’t instantiate a new client for every request you make to DynamoDB.

```ruby
# dynamodb_client.rb
$ddb = Aws::DynamoDB::Client.new({
  access_key_id: ENV['AWS_ACCESS_KEY_ID'],
  secret_access_key: ENV['AWS_SECRET_ACCESS_KEY'],
  region: ENV['AWS_REGION']
})
```

AWS provides a downloadable version of DynamoDB, DynamoDB Local, which can be used for development and testing. First, download the local version and follow the steps in the documentation to set it up and run it on your local machine.

To use it, just specify an endpoint in the DynamoDB client initialization hash as shown below:

```ruby
# dynamodb_client.rb
$ddb = Aws::DynamoDB::Client.new({
  access_key_id: ENV['AWS_ACCESS_KEY_ID'],
  secret_access_key: ENV['AWS_SECRET_ACCESS_KEY'],
  region: ENV['AWS_REGION'],
  endpoint: 'http://localhost:8000'
})
```

The DynamoDB Local server runs on port 8000 by default.
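Rather than editing the initializer when switching between environments, you could build the client options from environment variables. This is a minimal sketch: the DYNAMODB_ENDPOINT variable and the dynamodb_client_options helper are hypothetical names, and the helper only builds the hash that Aws::DynamoDB::Client.new receives.

```ruby
# Builds options for Aws::DynamoDB::Client.new. The endpoint is only added when the
# hypothetical DYNAMODB_ENDPOINT variable is set (e.g. to DynamoDB Local in development).
def dynamodb_client_options(env = ENV)
  options = {
    access_key_id: env['AWS_ACCESS_KEY_ID'],
    secret_access_key: env['AWS_SECRET_ACCESS_KEY'],
    region: env['AWS_REGION']
  }
  endpoint = env['DYNAMODB_ENDPOINT']
  options[:endpoint] = endpoint if endpoint && !endpoint.empty?
  options
end

# In the initializer: $ddb = Aws::DynamoDB::Client.new(dynamodb_client_options)
```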

Put it all together with this simple example

Suppose you need to store user information, along with shipping addresses, for an e-commerce website. The users table will hold information such as first_name, last_name, email, profile_pictures, authentication_tokens, addresses, and much more.

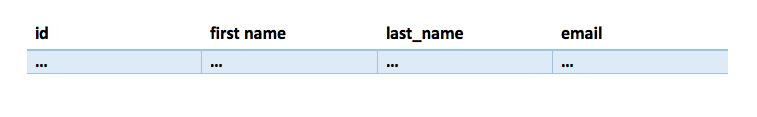

In relational databases, a users table might look like this:

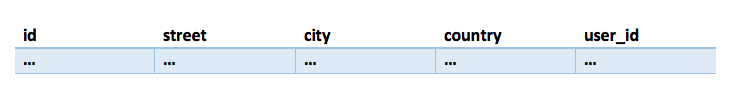

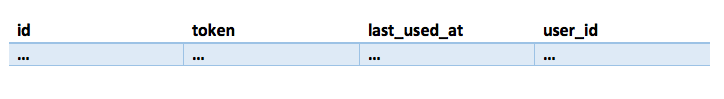

And addresses and authentication tokens will need to be placed separately in other tables with the id of a user as Foreign Key:

In DynamoDB, there are no Foreign Keys and no joins. You can reference related data stored in another table, as you would in a relational database, but it’s not efficient, since every reference costs an extra request. A better approach is to denormalize the data into a single users table. As DynamoDB is a key-value store, each item in the users table would look like this:

```json
{
  "first_name": "string",
  "last_name": "string",
  "email": "string",
  "created_at": "Date",
  "updated_at": "Date",
  "authentication_tokens": [
    {
      "token": "string",
      "last_used_at": "Date"
    }
  ],
  "addresses": [
    {
      "city": "string",
      "state": "string",
      "country": "string"
    }
  ]
}
```

Make email the Partition Key of the primary key and leave out the Range Key: each user has a unique email address, and we definitely need to look up a user by email.

In the future, you might need to search for users by first_name or last_name, which makes these attributes good candidates for a Range Key in a secondary index. You may also want to get users registered or updated on a particular date, which can be found with the created_at and updated_at fields, making them good candidates for the Partition Key of a Global Secondary Index.

For now, we will create one Global Secondary Index (GSI), with created_at as the Partition Key and first_name as the Range Key, allowing you to run queries like:

```sql
SELECT * FROM users WHERE created_at = 'xxx' AND first_name LIKE 'xxx%'
```

Basic CRUD Operations

Following the convention that all persistence and querying logic stays in the model, first create a User class that includes the ActiveModel::Model and ActiveModel::Serialization modules.

ActiveModel::Model adds validations, naming and conversion helpers, and an initializer that assigns attributes from a hash. The main reason for including it is to initialize the User model with a parameters hash, as Active Record does.

ActiveModel::Serialization provides the serializable_hash helper, which relies on an attributes method listing the model’s attribute names; in a Rails app, as_json and to_json are also available on any object through ActiveSupport. After including these two modules, you can declare the attributes of the User model with attr_accessor. At this point, the User model looks like this:

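```ruby
# models/user.rb
class User
  include ActiveModel::Model
  include ActiveModel::Serialization

  attr_accessor :first_name, :last_name, :email, :addresses, :authentication_tokens, :created_at, :updated_at

  # serializable_hash (from ActiveModel::Serialization) reads attribute names from these keys
  def attributes
    %w[first_name last_name email addresses authentication_tokens created_at updated_at].map { |n| [n, nil] }.to_h
  end

  # Name of the DynamoDB table used by this model (assumed to be "users")
  def self.table_name
    'users'
  end
end
```

You can now create User objects by passing a parameters hash to User.new and serialize them, but you cannot persist them in DynamoDB yet. To persist data, you need to create a table in DynamoDB and let the model know about and access that table.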

I prefer to create a migrate_table! class method that holds the logic required for table creation. If the table already exists, it is deleted (along with all its data) and recreated, and the method waits until the new table is ready, since table creation on DynamoDB can take a while.

```ruby
# models/user.rb
...

# Drops the table if it exists and recreates it. All existing data is lost!
def self.migrate_table!
  if $ddb.list_tables.table_names.include?(table_name)
    $ddb.delete_table(table_name: table_name)
    # the old table must be fully deleted before a table with the same name can be created
    $ddb.wait_until(:table_not_exists, table_name: table_name)
  end

  create_table_params = {
    table_name: table_name,
    # names and types of the attributes used in the key schemas of the table and its indexes
    attribute_definitions: [
      { attribute_name: "first_name", attribute_type: "S" },
      { attribute_name: "created_at", attribute_type: "S" },
      { attribute_name: "email", attribute_type: "S" }
    ],
    # key_schema specifies the attributes that make up the primary key of the table
    # key_type is either HASH (Partition Key) or RANGE (Range Key)
    key_schema: [
      { attribute_name: "email", key_type: "HASH" }
    ],
    # each global secondary index has a name, a key schema, a projection,
    # and its own provisioned throughput
    global_secondary_indexes: [
      {
        index_name: "created_at_first_name_index",
        key_schema: [
          { attribute_name: "created_at", key_type: "HASH" },
          { attribute_name: "first_name", key_type: "RANGE" }
        ],
        # projection: attributes copied (projected) from the table into the index
        # KEYS_ONLY - only the index and primary keys are projected
        # ALL       - all table attributes are projected
        # INCLUDE   - the keys plus the attributes listed in non_key_attributes
        projection: { projection_type: "ALL" },
        # provisioned throughput settings for this index
        provisioned_throughput: { read_capacity_units: 1, write_capacity_units: 1 }
      }
    ],
    # provisioned throughput settings for the table itself
    provisioned_throughput: { read_capacity_units: 1, write_capacity_units: 1 }
  }

  $ddb.create_table(create_table_params)
  # wait until the table has been created
  $ddb.wait_until(:table_exists, table_name: table_name)
end

...
```

Creating an Item

DynamoDB provides the #put_item method, which creates a new item with the given attributes and primary key. If an item with the same primary key already exists, it is replaced by the new item.

```ruby
# models/user.rb
class User
  ...

  def save
    # hash of every instance variable that has been set, e.g. { 'email' => '...', ... }
    item_hash = instance_values
    begin
      resp = $ddb.put_item({
        table_name: self.class.table_name,
        item: item_hash,
        return_values: 'NONE'
      })
      resp.successful?
    rescue Aws::DynamoDB::Errors::ServiceError
      false
    end
  end

  ...
end
```

The instance method save simply saves the item and returns true or false depending on the response. The instance_values method (provided by ActiveSupport) returns a hash of all instance variables that have been set, i.e. the attr_accessor fields, which is passed to the item key as item_hash.

The return_values option of the put_item request determines whether DynamoDB returns the item’s previous attributes when it overwrites an existing item ('ALL_OLD') or nothing at all ('NONE'). We are only interested in whether the item was saved successfully, hence 'NONE'.

Reading an item

Fetching an item from DynamoDB by its primary key is similar to the way Active Record finds records by id in a relational database.

The #get_item method fetches a single item for a given primary key. If no item is found, the item element of the response is nil.

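```ruby
# models/user.rb
...

def self.find(email)
  return nil if email.blank?

  resp = $ddb.get_item({
    table_name: table_name,
    key: { email: email }
  })
  # resp.item is a hash of attributes, or nil if no item has this key;
  # wrap it in a User so that instance methods like update and delete can be used
  resp.item && new(resp.item)
rescue Aws::DynamoDB::Errors::ServiceError
  nil
end

...
```

Updating an item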

An item is updated with the #update_item method, which behaves like an upsert (update or insert) in PostgreSQL: it updates the item with the given primary key, and if no item with that key exists, it creates a new one. This might sound similar to how #put_item works, but the difference is that #put_item replaces the entire item, whereas #update_item modifies only the specified attributes and leaves the others untouched.

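```ruby
# models/user.rb
def update(attrs)
  # work on a copy so the caller's hash is not modified; the primary key (email)
  # cannot be changed with update_item, so it is left out
  item_hash = attrs.stringify_keys.except('email')
  item_hash['updated_at'] = DateTime.current.to_s

  # convert { 'attr' => value } into { 'attr' => { value: value, action: 'PUT' } }
  attribute_updates = item_hash.transform_values { |value| { value: value, action: 'PUT' } }

  begin
    resp = $ddb.update_item({
      table_name: self.class.table_name,
      key: { email: email },
      attribute_updates: attribute_updates
    })
    resp.successful?
  rescue Aws::DynamoDB::Errors::ServiceError
    false
  end
end
```

When updating an item, you need to specify its primary key and, for each attribute, an action: PUT replaces the attribute’s value, ADD adds to a number or a set, and DELETE removes the attribute.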

Look closely at how item_hash is formed. The attributes are passed to update as a plain hash, and each key => value pair is then converted into a hash with two fields, value and action, which is the format the attribute_updates parameter expects.
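The conversion can be isolated in small helpers, shown here as standalone functions with hypothetical names. AWS documents attribute_updates as a legacy parameter and recommends update expressions instead, so the sketch also builds the equivalent SET expression, using #name and :value placeholders so that attribute names never clash with DynamoDB’s reserved words.

```ruby
# Converts { 'attr' => value } into the (legacy) attribute_updates format.
def to_attribute_updates(attrs)
  attrs.each_with_object({}) do |(name, value), updates|
    updates[name.to_s] = { value: value, action: 'PUT' }
  end
end

# Builds the equivalent SET update expression with name/value placeholders.
def to_update_expression(attrs)
  raise ArgumentError, 'attrs must not be empty' if attrs.empty?

  names = {}
  values = {}
  assignments = attrs.each_with_index.map do |(name, value), i|
    names["#n#{i}"] = name.to_s
    values[":v#{i}"] = value
    "#n#{i} = :v#{i}"
  end
  {
    update_expression: "SET #{assignments.join(', ')}",
    expression_attribute_names: names,
    expression_attribute_values: values
  }
end

attrs = { 'first_name' => 'Jane', 'last_name' => 'Doe' }
p to_attribute_updates(attrs)
p to_update_expression(attrs)
```

Either result can be merged into the update_item parameters, for example $ddb.update_item({ table_name: 'users', key: { email: email } }.merge(to_update_expression(attrs))); a single request should use one style or the other, not both.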

Deleting an item

The #delete_item method deletes the item with the specified primary key. If no such item exists, it doesn’t return an error.

```ruby
# models/user.rb
def delete
  return false if email.blank?

  resp = $ddb.delete_item({
    table_name: self.class.table_name,
    key: { email: email }
  })
  resp.successful?
rescue Aws::DynamoDB::Errors::ServiceError
  false
end
```

Conditional Writes

All DynamoDB operations can be categorized into two types: read operations, such as get_item, and write operations, such as put_item, update_item, and delete_item.

Write operations can be made conditional. For example, a put_item can be constrained so that it is performed only if no item with the same primary key already exists. All write operations (put_item, update_item, and delete_item) support these conditional writes.

For example, to create an item only if it isn’t already present, add attribute_not_exists(attribute_name) as the value of the condition_expression key in the #put_item params.

```ruby
def save!
  now = DateTime.current.to_s
  item_hash = instance_values
  item_hash['updated_at'] = now
  item_hash['created_at'] = now

  begin
    resp = $ddb.put_item({
      table_name: self.class.table_name,
      item: item_hash,
      return_values: 'NONE',
      # fail instead of overwriting if an item with this email already exists
      condition_expression: 'attribute_not_exists(email)'
    })
    resp.successful?
  rescue Aws::DynamoDB::Errors::ServiceError
    # also covers ConditionalCheckFailedException, raised when the condition is not met
    false
  end
end
```
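Conditions also address the upsert behavior of #update_item described earlier. A minimal sketch, assuming the same users table keyed on email: with attribute_exists(email) as the condition, the update fails with a ConditionalCheckFailedException instead of silently creating a new item for an unknown email. The helper name is hypothetical, and it only builds the parameters hash.

```ruby
# Parameters for an update that only succeeds if a user with this email already exists.
# Without the condition, update_item would create a new item for an unknown email.
def update_existing_user_params(table_name, email, first_name)
  {
    table_name: table_name,
    key: { email: email },
    update_expression: 'SET first_name = :first_name',
    condition_expression: 'attribute_exists(email)',
    expression_attribute_values: { ':first_name' => first_name }
  }
end

params = update_existing_user_params('users', 'jane@example.com', 'Janet')
# With a real client:
#   $ddb.update_item(params)
#   # raises Aws::DynamoDB::Errors::ConditionalCheckFailedException if no such user exists
```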

Batch Operations

Apart from the four basic CRUD operations, DynamoDB provides two batch operations:

- #batch_get_item: reads up to 100 items from one or more tables, i.e., you can batch up to 100 #get_item calls into a single #batch_get_item.
- #batch_write_item: performs up to 25 write requests, across one or more tables, in a single #batch_write_item. Each request is either a put (like #put_item) or a delete (like #delete_item); #update_item cannot be batched.

You can read more about batch operations in the AWS developer guide.
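Because each call is capped, larger workloads have to be split into chunks. A sketch, assuming the users table keyed on email; the helper names are hypothetical, and the helpers only build the request hashes for #batch_get_item and #batch_write_item.

```ruby
BATCH_GET_LIMIT = 100  # max keys per batch_get_item
BATCH_WRITE_LIMIT = 25 # max put/delete requests per batch_write_item

# Splits emails into batch_get_item requests of at most 100 keys each.
def batch_get_requests(table_name, emails)
  emails.each_slice(BATCH_GET_LIMIT).map do |slice|
    { request_items: { table_name => { keys: slice.map { |email| { email: email } } } } }
  end
end

# Splits items into batch_write_item requests of at most 25 put requests each.
def batch_put_requests(table_name, items)
  items.each_slice(BATCH_WRITE_LIMIT).map do |slice|
    { request_items: { table_name => slice.map { |item| { put_request: { item: item } } } } }
  end
end

emails = (1..230).map { |i| "user#{i}@example.com" }
get_requests = batch_get_requests('users', emails)
put_requests = batch_put_requests('users', emails.map { |e| { email: e } })
```

Each hash would be passed to $ddb.batch_get_item or $ddb.batch_write_item. DynamoDB may not process every key or item in a single call, so check unprocessed_keys (for gets) or unprocessed_items (for writes) in each response and retry them, ideally with a backoff.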

Query and Scan Operations

Batch operations are not very useful for querying data, so DynamoDB provides Query and Scan for fetching records.

- Query: fetches items based on the Partition Key and, optionally, the Sort Key of the table or of a secondary index.
  - You pass a single value for the Partition Key and, optionally, a condition on the Sort Key using comparison operators (such as =, <, <=, >, >=, BETWEEN, or begins_with) to further narrow down the results. Results come back in Sort Key order, ascending by default.
- Scan: reads every item in a table or a secondary index. Results are not returned in any particular order, and scanning a large table is slow and consumes a lot of read capacity.

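Both Query and Scan accept a filter expression to narrow down the returned results. The filter is applied after the items are read, so it reduces the data returned but not the read capacity consumed.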
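Putting this together with the users example, a Query against the created_at_first_name_index GSI created earlier could look like the sketch below; the helper names are hypothetical. Because the model stores created_at as a full timestamp string, the Partition Key condition must match that exact string. A single Query call reads at most 1 MB of data, so the second helper follows last_evaluated_key until every page has been read.

```ruby
# Query parameters for the created_at_first_name_index GSI: users with an exact
# created_at value whose first_name starts with the given prefix.
def users_by_created_at_params(created_at, first_name_prefix)
  {
    table_name: 'users',
    index_name: 'created_at_first_name_index',
    key_condition_expression: 'created_at = :created_at AND begins_with(first_name, :prefix)',
    expression_attribute_values: {
      ':created_at' => created_at,
      ':prefix' => first_name_prefix
    },
    scan_index_forward: true # ascending by first_name; false for descending
  }
end

# Reads every page of a Query. `client` is anything with a query(params) method whose
# response has items and last_evaluated_key, such as Aws::DynamoDB::Client.
def query_all(client, params)
  items = []
  start_key = nil
  loop do
    page_params = start_key ? params.merge(exclusive_start_key: start_key) : params
    resp = client.query(page_params)
    items.concat(resp.items)
    start_key = resp.last_evaluated_key
    break if start_key.nil? || start_key.empty?
  end
  items
end
```

With the real client, query_all($ddb, users_by_created_at_params(timestamp, 'Ja')) returns raw item hashes, which could then be mapped to User.new(item). The SDK can also enumerate response pages for you; the loop above just makes the protocol explicit.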

Conclusion

DynamoDB, a fully managed NoSQL database service, is a good choice if you don’t want to worry about scaling and handling large amounts of data. Even though it promises single-digit millisecond performance and virtually unlimited scalability, you need to be careful when designing your tables and choosing the primary key and secondary indexes; otherwise, you can lose these benefits and your costs may go up.

The Ruby SDK v2 provides an interface to interact with DynamoDB, and you can read more about the methods it provides in the SDK documentation. The Developer Guide is also the perfect place to understand DynamoDB in depth.

| Reference: | Working with DynamoDB from our WCG partner Parth Modi at the Codeship Blog. |